In January I wrote a post on whether detecting heat could supplant vision, and concluded that it was, in fact, just a form of sight. I wished to tackle echolocation next, but wondered where to start: with echolocating animals in fictional biology? Other possible questions would be which atmosphere would be best, which frequencies to use, how it compares with vision, etc. In the end I decided to start (there's more!) with a post on the nature of echolocation; so here we go...

The basic principle is simple: you send out a sound and if an echo returns, there is something out there. As everyone knows, dolphins and bats are expert echolocators, but it is less well known that some blind people are quite good at it, and that they in fact use their occipital cortex to process echoes, a brain region normally busy with analysing visual signals. That direct link between vision and echolocation is perhaps not that surprising, as both senses help build a spatial representation of the world outside: what is where?

A major difference between vision and echolocation is how distances are judged. In vision, judging distances depends on complex image analysis, but in echolocation the time between emitting a sound and the arrival of the echo directly tells you how far away an object is. The big problem here is that echoes are much fainter than the emitted sound. The reason for that is the 'inverse square law', something that works for light as well as for sound.

Copyright Gert van Dijk

The image above explains the principle. Sound waves emanate from a source near the man in the middle and spread as widening spheres (A, B and C). As the spheres get bigger, the intensity of the sound diminishes per 'unit area'. A 'unit area' can be a square meter, but can also be the size of your ear. When you are close to the source your ear corresponds to some specific part of the sphere, and when you move away your ear will correspond to a smaller part of the sphere: the sound will be less loud. Now, the area of the sphere increases with the square of the distance. If you double the distance from the source, the area of the sphere increases fourfold, and the part your ear catches will decrease fourfold. To continue: increase the distance threefold and the intensity decreases ninefold. Move away ten times the original distance from the source, and the sound intensity becomes 100 times smaller!
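The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name `relative_intensity` is mine, and it assumes an ideal point source in open air, with no absorption and no reflections from the ground.

```python
def relative_intensity(distance_factor: float) -> float:
    """Sound intensity relative to the reference distance.

    Assumes an ideal point source radiating equally in all directions,
    so the same power is spread over a sphere whose area grows with
    the square of the distance.
    """
    if distance_factor <= 0:
        raise ValueError("distance_factor must be positive")
    return 1.0 / distance_factor ** 2


for factor in (2, 3, 10):
    fraction = relative_intensity(factor)
    print(f"{factor}x the distance: {fraction:.4f} of the original intensity")
```

Doubling, tripling and tenfolding the distance give a quarter, a ninth and a hundredth of the original intensity, matching the numbers in the text.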

In the image above, only a tiny fraction of the original sound will hit the 'object', a man, at the left. Not all of that will bounce back, and the part that is reflected forms a new sound: the echo. The echo in turn decreases immensely before arriving at the sender, and that is the essence of echolocation: to hear a whisper you have to shout.

Copyright Gert van Dijk

As if the 'inverse square law' were not bad enough, there is another nasty characteristic of echolocation. At the left (A) you see a random predator using echolocation. Oh, all right, it's not random, but Dougal Dixon's 'nightstalker' (brilliant at the time!). It sends out sound waves (black circles) of which a tiny part will hit a suitable prey; there's that man again. As said, the echoes travel back while decreasing in strength (red circles).

There will be some distance at which a prey of this size can just be detected. Any further away and the returning echoes will be too faint to detect. Suppose that this is the case here, meaning 10 m is the limit at which a nightstalker can detect a man (as mankind is extinct in the nightstalker's universe no one will be hurt).

Here's the catch: most of the sound emitted by the nightstalker travels on beyond the prey. These sound waves can be picked up easily by other animals further away than 10 meters (I assume you recognise the creature listening there; it's pretty frightening). For animals out there the sound only has to travel in one direction and none of it gets lost in bouncing back from the prey. The unfortunate consequence of all this 'shouting to hear a whisper' is that the nightstalker is announcing its presence loudly to animals that it cannot detect itself!
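To get a feeling for how lopsided this is, here is a rough sketch in Python. It rests on assumptions that are mine, not measurements: the sound spreads equally in all directions, nothing is absorbed by the air, the prey re-radiates what it intercepts as a new point source with a hypothetical effective cross-section of 1 m², and the eavesdropper hears exactly as well as the echolocator. All function names are made up for the illustration.

```python
import math


def one_way_intensity(power_w: float, distance_m: float) -> float:
    """Intensity (W/m^2) of the call at a given distance from the caller."""
    return power_w / (4 * math.pi * distance_m ** 2)


def echo_intensity(power_w: float, distance_m: float,
                   cross_section_m2: float) -> float:
    """Intensity of the echo back at the caller.

    The inverse square law is applied twice: once on the way out,
    once on the way back from the prey.
    """
    at_prey = one_way_intensity(power_w, distance_m)
    reflected_power = at_prey * cross_section_m2  # assumed re-radiated in all directions
    return one_way_intensity(reflected_power, distance_m)


def eavesdropping_range(detection_range_m: float,
                        cross_section_m2: float) -> float:
    """Distance at which the direct call is as loud as the faintest usable echo."""
    return detection_range_m ** 2 * math.sqrt(4 * math.pi / cross_section_m2)


limit = eavesdropping_range(detection_range_m=10.0, cross_section_m2=1.0)
print(f"Prey detectable at 10 m; call audible out to about {limit:.0f} m")
```

Under these assumptions, a call that is just loud enough to find a man at 10 m can be heard by an equally sharp-eared listener at roughly 354 m. The exact figure depends heavily on the assumed cross-section, but the point stands: the echo falls off with the fourth power of the distance, the call itself only with the square.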

This suggests that echolocation could be a dangerous luxury. One way to use it safely would be if other predators cannot get to you anyway. Is that why bats, up there in the air, can afford echolocation? Another solution would be to be big and bad, so you can afford to be noisy. If so, echolocation is not a suitable tool to find a yummy carrot if you are an inoffensive rabbit-analogue. The carrot does not care, but the wolf-analogue will.

---------------------

Getting back on topic, we now know that echolocation tells you how far away an object is. To make sense of the world you will also need to know where the object is spatially: left and right and up and down. With hearing this is more difficult than with vision, but it can be done. The spatial resolution of bats is one or two degrees (see here for that), which is impressive but still 60 to 120 times less good than human vision. For now, let's take it for granted that an echolocating animal can locate echo sources. Next, let's try to visualise what it may be like.
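As a quick aside on those resolution figures, the sketch below turns an angular resolution into the width of the smallest patch that can be told apart at a given distance. The figure of one arcminute (1/60 of a degree) for human vision is my assumption; it is the value that the ratio of 60 to 120 implies. The function name `patch_width` is made up for the illustration.

```python
import math


def patch_width(distance_m: float, resolution_deg: float) -> float:
    """Width (m) subtended by an angle of resolution_deg at distance_m."""
    return 2 * distance_m * math.tan(math.radians(resolution_deg) / 2)


bat = patch_width(10.0, 1.0)         # bat echolocation, best case from the text
human = patch_width(10.0, 1.0 / 60)  # human vision, assumed 1 arcminute

print(f"Bat at 10 m:   {bat * 100:.1f} cm")
print(f"Human at 10 m: {human * 1000:.1f} mm")
```

At 10 m, one degree corresponds to a patch about 17 cm wide, while one arcminute corresponds to about 3 mm.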

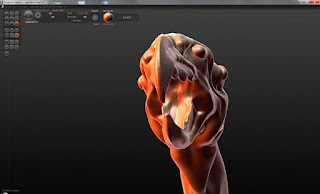

Copyright Gert van Dijk

Here is a scene with a variety of objects on a featureless plain. The objects have transparency, colours, shadows, etc. At one glance we see them all, as well as the horizon, the clouds, etc., without restrictions regarding distance, all in high resolution. The glory of vision, for all to see.

Copyright Gert van Dijk

Colour is purely visual, so to mimic echolocation it has to go. All the objects are now just white. They are also all featureless, but that is for simplicity's sake only: vision and echolocation can both carry information about things like wrinkles and bumps, so I left texture out.

Copyright Gert van Dijk

In sight the main source of light is the sun shining from above, but in echolocation you have to provide your own energy. To mimic that, the only light source left is at the camera. The resulting image looks like that of a flash photograph, for good reasons: the light follows the inverse square law, as does sound. Nearby objects reflect a lot of light (sound!) for two reasons: they are close by, and part of their surfaces face the camera squarely, turning light directly back at the camera. This is an 'intensity image'.

Copyright Gert van Dijk

With light, you see nearby and far objects at the same time, but that is not true for sound. Sound travels in air at about 333 m/s, so sound takes about 3 ms to travel one meter. An object one meter away will produce an echo in 6 ms: 3 ms going to the object and 3 ms travelling back. The image above shows the same scene, but now the grey levels indicate the distance from the camera. Light areas in the image are close by, dark areas are further away. This is a 'depth image', formed courtesy of the ray tracing algorithms in Vue Infinite.
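The relation between distance and echo delay is simple enough to put in a few lines of Python. This is a minimal sketch using the round figure of 333 m/s from the text; the function names are mine.

```python
SPEED_OF_SOUND = 333.0  # m/s in air, the round figure used in this post


def echo_delay_ms(distance_m: float, speed: float = SPEED_OF_SOUND) -> float:
    """Time (ms) between emitting a sound and hearing its echo."""
    return 2 * distance_m / speed * 1000  # factor 2: there and back


def distance_from_delay(delay_ms: float, speed: float = SPEED_OF_SOUND) -> float:
    """Distance (m) of the object that produced an echo after delay_ms."""
    return speed * (delay_ms / 1000) / 2


for d in (1, 2, 10):
    print(f"object at {d} m: echo after {echo_delay_ms(d):.1f} ms")
```

The second function is the one an echolocator needs: it turns a measured delay straight into a distance, with no image analysis required.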

Copyright Gert van Dijk

Now the scene is set to mimic echolocation. Let's send out an imaginary 'ping'; each interval in time determines how far away an echo-producing object is. For instance, the interval from 6 to 12 ms after the 'ping' corresponds to objects 1 to 2 meters away. While the depth image tells us how far away objects are, the intensity image tells us how much of an echo is produced there. To make things easier for the human eye a visual clue was added: echoes returning early are shown in red, while those returning later are blue. Above is a video showing three successive 'pings'. As the echoes bounce back, areas close by will light up in red, and objects further away will produce an echo in blue, later on. I blurred the images a bit to mimic the relatively poor spatial resolution of echolocation.
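For those who like to see the recipe spelled out, here is a rough Python sketch of the idea. It is not the actual procedure used to make the video: it works on tiny nested lists instead of rendered images, leaves out the blurring, and all names (`echo_frames`, `frame_colour`) are invented for the illustration. Depth is assumed to be in meters and intensity to run from 0 to 1.

```python
SPEED_OF_SOUND = 333.0  # m/s, the round figure used in this post


def echo_frames(depth_m, intensity, frame_ms, n_frames):
    """Split one 'ping' into successive time frames.

    Each frame shows only the pixels whose echo returns during that
    time interval; their brightness is taken from the intensity image.
    """
    rows, cols = len(depth_m), len(depth_m[0])
    frames = [[[0.0] * cols for _ in range(rows)] for _ in range(n_frames)]
    for r in range(rows):
        for c in range(cols):
            delay_ms = 2 * depth_m[r][c] / SPEED_OF_SOUND * 1000
            k = int(delay_ms // frame_ms)  # index of the time interval
            if k < n_frames:               # later echoes are ignored
                frames[k][r][c] = intensity[r][c]
    return frames


def frame_colour(k, n_frames):
    """Red for the earliest frame, blue for the latest, as (r, g, b)."""
    t = k / (n_frames - 1) if n_frames > 1 else 0.0
    return (1.0 - t, 0.0, t)


# A 2x2 'scene': one object at 0.5 m, two at 1.5 m, one at 3 m.
depth = [[0.5, 1.5], [1.5, 3.0]]
brightness = [[1.0, 0.4], [0.3, 0.1]]

for k, frame in enumerate(echo_frames(depth, brightness, frame_ms=6, n_frames=4)):
    print(k, frame_colour(k, 4), frame)
```

With 6 ms frames, the object at 0.5 m shows up in the first frame, the two at 1.5 m in the second, nothing in the third, and the object at 3 m in the fourth.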

I personally found it difficult to reconstruct a three-dimensional image of the world using such images, but my visual system is not used to getting its cues in such fashion.

Copyright Gert van Dijk

One easy processing trick to improve the image is to remember the location of early echoes. The video above does that, by adding new echoes without erasing the old ones. The image is wiped as a new ping starts. More advanced neuronal analyses could take care of additional clues such as the Doppler effect, to read your own or an object's movement. By the way, the above is in slow-motion. In real life echoes from an object 10 m away would only take 60 ms to get back. Even without any overlap you could afford 16 pings a second for that range. That is not bad: after all, 20-25 frames a second is enough to trick our visual system into thinking that there is continuous movement.
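The ping rate follows directly from the round-trip time. A small sketch, again using 333 m/s and assuming that a new ping is only sent once the echo from the furthest object of interest has returned (the function name is mine):

```python
def max_ping_rate(range_m: float, speed: float = 333.0) -> float:
    """Highest ping rate (per second) without overlapping echoes."""
    round_trip_s = 2 * range_m / speed
    return 1 / round_trip_s


for range_m in (2, 10, 50):
    print(f"range {range_m} m: up to {max_ping_rate(range_m):.1f} pings per second")
```

For a range of 10 m this gives between 16 and 17 pings a second. Halving the range doubles the rate, so an animal closing in on its prey could afford to ping faster and faster.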

So there we are. Is this simple metaphor a valid indication of what echolocation is like? Probably not, but it does point out a few basic characteristics of echolocation. Echolocation must be claustrophobic: no clouds, no horizon, just your immediate surroundings. It would seem the meek cannot afford it, as it may be the most abrasive and abusive of senses.

Is it therefore completely inferior to vision? Well, yes and no...